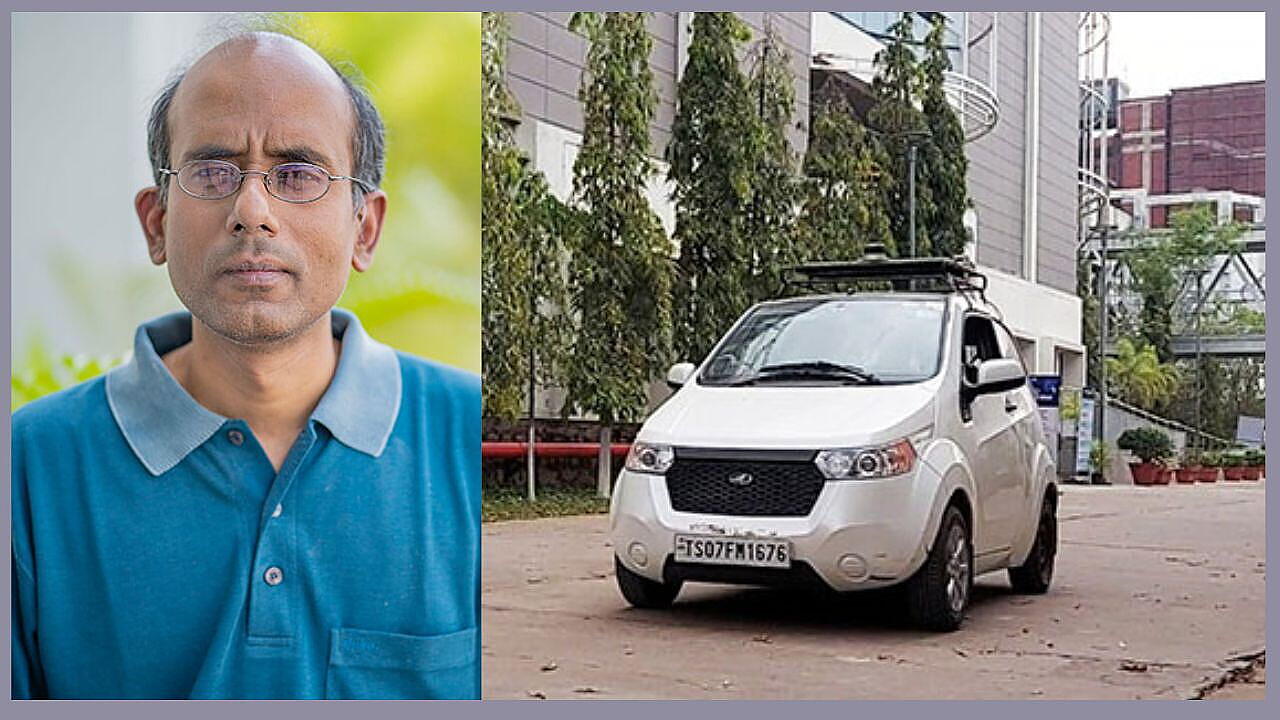

IIIT Hyderabad has unveiled a self-driving electric vehicle that combines autonomous driving with human-like navigation. The car performs point-to-point autonomous driving with collision avoidance over a wide area, using 3D LIDAR, depth cameras, GPS, and an AHRS (Attitude and Heading Reference System) for spatial orientation. It also accepts open-set natural language commands, so it can follow contextual verbal instructions such as “Stop near the white building.”

According to Prof Madhava Krishna, Head of the Robotics Research Centre and the Kohli Center for Intelligent Systems (KCIS) at IIIT Hyderabad, the vehicle uses SLAM-based point cloud mapping to map its environment and LIDAR-guided state estimation for real-time localisation.

Advanced trajectory optimisation frameworks, modelled on predictive control, allow the vehicle to generate and execute optimal driving paths. These frameworks are initialised with data-driven models to speed up decision-making, which improves the vehicle’s performance in dynamic environments.
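To make the idea concrete, here is a minimal sketch of predictive-control-style trajectory optimisation with a warm start. It is not the team's implementation: the point-mass model, the cost weights, the obstacle position, and the names `rollout`, `cost`, `optimise`, and `drive` are all assumptions for illustration. A fixed straight-line guess stands in for the data-driven initialisation the article describes.

```python
import math

DT = 0.5                 # seconds per step (assumed)
GOAL = (10.0, 0.0)       # hypothetical goal position, metres
OBSTACLE = (5.0, 0.2)    # hypothetical obstacle centre, metres
SAFE_RADIUS = 1.0        # clearance to keep around the obstacle, metres


def rollout(start, controls):
    """Integrate a point-mass model; each control is a (vx, vy) velocity."""
    x, y = start
    path = [(x, y)]
    for vx, vy in controls:
        x, y = x + vx * DT, y + vy * DT
        path.append((x, y))
    return path


def cost(start, controls):
    """Terminal goal error + control effort + soft obstacle penalty."""
    path = rollout(start, controls)
    end_x, end_y = path[-1]
    total = (end_x - GOAL[0]) ** 2 + (end_y - GOAL[1]) ** 2
    total += 0.01 * sum(vx * vx + vy * vy for vx, vy in controls)
    for px, py in path:
        d = math.hypot(px - OBSTACLE[0], py - OBSTACLE[1])
        if d < SAFE_RADIUS:
            total += 50.0 * (SAFE_RADIUS - d) ** 2
    return total


def optimise(start, init_controls, iters=50, eps=1e-4):
    """Finite-difference gradient descent with a backtracking line search."""
    controls = [list(c) for c in init_controls]
    best = cost(start, controls)
    for _ in range(iters):
        grad = [[0.0, 0.0] for _ in controls]
        for i in range(len(controls)):
            for j in range(2):
                controls[i][j] += eps
                grad[i][j] = (cost(start, controls) - best) / eps
                controls[i][j] -= eps
        step = 1.0
        while step > 1e-6:
            trial = [[c[j] - step * g[j] for j in range(2)]
                     for c, g in zip(controls, grad)]
            trial_cost = cost(start, trial)
            if trial_cost < best:      # accept only steps that lower the cost
                controls, best = trial, trial_cost
                break
            step *= 0.5
        else:
            break                      # no improving step found: stop early
    return controls, best


def drive(start, horizon=8, steps=5):
    """Receding-horizon loop: optimise, apply the first control, re-plan."""
    state = start
    guess = [(2.5, 0.0)] * horizon     # stand-in for a learned initial guess
    for _ in range(steps):
        plan, _cost = optimise(state, guess)
        vx, vy = plan[0]
        state = (state[0] + vx * DT, state[1] + vy * DT)
        guess = plan[1:] + [plan[-1]]  # shift the old plan to warm-start the next solve
    return state
```

The closer the initial guess is to a good trajectory, the fewer iterations each solve needs, which is the motivation for seeding the optimiser with a learned model rather than starting from scratch.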

Open Set Navigation: A Human-Like Approach

Drawing inspiration from human navigation, IIIT Hyderabad’s innovation integrates environmental landmarks into its navigation system. Instead of relying heavily on high-definition maps or high-resolution GPS, which are resource-intensive, the car uses open-source topological maps such as OpenStreetMap (OSM) combined with semantic language landmarks, such as “bench” or “football field.” This approach mimics cognitive localisation processes, offering an “open-vocabulary” feature for zero-shot generalisation to unfamiliar locations.

This scalable method addresses limitations of traditional mapping systems, such as inaccuracies in OSM maps and the absence of dynamic landmarks like open parking spaces. By leveraging foundation models with semantic understanding, the car bridges the gap between classical and modern approaches to autonomous driving, making navigation intuitive and efficient, Krishna said.
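As a rough illustration of navigating on a topological map annotated with language landmarks, the sketch below resolves a spoken command to a map node and then routes to it. The graph, the landmark labels, and the functions `score`, `resolve_goal`, and `shortest_path` are hypothetical. The word-overlap score is only a stand-in for the open-vocabulary matching that a foundation model would provide.

```python
import heapq

# Hypothetical road graph in the spirit of an OSM extract: node -> {neighbour: metres}
GRAPH = {
    "gate": {"junction": 120.0},
    "junction": {"gate": 120.0, "library": 80.0, "field": 200.0},
    "library": {"junction": 80.0, "field": 90.0},
    "field": {"junction": 200.0, "library": 90.0},
}

# Hypothetical language landmarks attached to map nodes
LANDMARKS = {
    "library": ["white building", "bench"],
    "field": ["football field"],
    "gate": ["security cabin"],
}


def score(command, label):
    """Toy similarity: fraction of the label's words that appear in the command."""
    words = {w.strip(".,!?") for w in command.lower().split()}
    label_words = label.lower().split()
    return sum(w in words for w in label_words) / len(label_words)


def resolve_goal(command):
    """Return the node whose landmark best matches the command, or None."""
    best_score, best_node = max(
        (score(command, label), node)
        for node, labels in LANDMARKS.items()
        for label in labels
    )
    return best_node if best_score > 0 else None


def shortest_path(graph, source, target):
    """Dijkstra's algorithm over the topological graph."""
    queue = [(0.0, source, [source])]
    seen = set()
    while queue:
        dist, node, path = heapq.heappop(queue)
        if node == target:
            return dist, path
        if node in seen:
            continue
        seen.add(node)
        for nxt, length in graph[node].items():
            if nxt not in seen:
                heapq.heappush(queue, (dist + length, nxt, path + [nxt]))
    return None


goal = resolve_goal("Stop near the white building.")
print(goal, shortest_path(GRAPH, "gate", goal))
```

Because the landmarks are stored as free text rather than a fixed class list, new labels can be added to the map without retraining anything in this toy version. In a real system the matching step would compare learned embeddings instead of counting words.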

Integrating Language With Vision For Planning

IIIT Hyderabad’s Autonomous Navigation Stack integrates mapping, localisation, and planning, enhanced by a vision-language model that processes real-time visual data alongside encoded natural language commands. This system enables the car to respond to commands such as “Park near the red car” while respecting real-world constraints, for example by staying out of non-road areas.

To ensure reliability, the system features a differentiable planner within a neural network framework, enabling end-to-end training. This architecture optimises both prediction accuracy and planning quality, addressing challenges like prediction errors from upstream perception modules.
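The following toy example sketches what training through a differentiable planner can look like. Everything in it is an assumption made for illustration: a one-parameter predictor, a planner with a closed-form quadratic solution, and the names `predict`, `plan`, `loss_and_grad`, and `train`. The chain rule is written out by hand here, whereas a neural network framework would compute the same gradient automatically.

```python
K = 1.0          # weight on the clearance term in the planner (assumed)
CLEARANCE = 1.5  # desired lateral gap to the obstacle, metres (assumed)
BETA = 1.0       # weight of the planning loss relative to the prediction loss


def predict(w, z):
    """Upstream perception stand-in: obstacle lateral position from a feature z."""
    return w * z


def plan(p_hat, lane=0.0):
    """Closed-form planner: argmin_y (y - lane)^2 + K * (y - (p_hat + CLEARANCE))^2."""
    return (lane + K * (p_hat + CLEARANCE)) / (1.0 + K)


def loss_and_grad(w, z, p_true, y_expert):
    """Joint loss and its gradient w.r.t. w, backpropagated through the planner."""
    p_hat = predict(w, z)
    y = plan(p_hat)
    loss = (p_hat - p_true) ** 2 + BETA * (y - y_expert) ** 2
    dy_dp = K / (1.0 + K)  # derivative of the planner output w.r.t. its input
    dloss_dp = 2.0 * (p_hat - p_true) + BETA * 2.0 * (y - y_expert) * dy_dp
    return loss, dloss_dp * z  # chain rule: d p_hat / d w = z


def train(data, w=0.0, lr=0.1, epochs=200):
    """Plain gradient descent on the mean joint loss."""
    for _ in range(epochs):
        grad = sum(loss_and_grad(w, *sample)[1] for sample in data) / len(data)
        w -= lr * grad
    return w


# Synthetic data: the true predictor weight is 0.8 and the expert plans from ground truth.
TRUE_W = 0.8
data = [(z, TRUE_W * z, plan(TRUE_W * z)) for z in (0.5, 1.0, 1.5, 2.0)]
print(train(data))
```

The planning term means a prediction error is penalised in proportion to how much it changes the resulting plan, which is one way to read the article's point about handling errors from upstream perception modules.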

Pioneering NLP, Vision-Language Models

The standout feature of this innovation is its lightweight vision-language model that blends visual scene understanding with natural language processing. By incorporating a neural network framework, the system ensures seamless integration of perception, prediction, and planning, allowing for precise, collision-free navigation in complex environments.

Bridging Future Of Autonomous Driving

IIIT Hyderabad’s in-house prototype represents a significant leap in autonomous driving research, combining classical methods with modern solutions in open-world understanding. This vehicle not only sets a benchmark in autonomous navigation but also opens new avenues for scalable, real-world deployment of self-driving systems.
